Hello, I'm looking for tips from experienced users (or the creator) on which library setting overrides are best for my use case:

I have a very large home library of 3.23 TB with 88,077 folders and 1,277,615 files.
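For a sense of scale when thinking about import time, the averages work out roughly as follows. This assumes decimal units (1 TB = 10^12 bytes); if the 3.23 figure is actually TiB, the per-file average is about 10% higher.

$$
\bar{s} = \frac{3.23 \times 10^{12}\ \text{bytes}}{1{,}277{,}615\ \text{files}} \approx 2.53 \times 10^{6}\ \text{bytes} \approx 2.5\ \text{MB per file}
$$

$$
\frac{1{,}277{,}615\ \text{files}}{88{,}077\ \text{folders}} \approx 14.5\ \text{files per folder}
$$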

This backup contains photos and videos, and many, if not all, of them have been duplicated over and over again. The worst part is the wrong or missing metadata, such as the files' "date of creation". There are also old, low-resolution photos and videos from old computers and mobile phones.

Why did this happen? Inexperience, many users handling the same data (human error), repeated and redundant backups for safety, original metadata lost because of different operating systems and software issues, copies created by WhatsApp/Telegram/other apps, photo editing, etc.

My goal is to keep or identify the original files and eliminate duplicates without losing old photos and videos or the correct timeline. I need to know which settings give the best import performance (shorter import times), and which settings are best for identifying original files and removing duplicates.
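For reference, "exact duplicates" (byte-identical copies) can be found by hashing file contents, regardless of filename or filesystem dates. Below is a minimal standard-library sketch of that idea. It is not how PhotoStructure works internally, and the function names `file_sha256` and `find_exact_duplicates` are hypothetical.

```python
import hashlib
import os
from collections import defaultdict


def file_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file's contents in chunks so large videos don't fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_exact_duplicates(root: str) -> list[list[str]]:
    """Return groups of paths under `root` whose contents are byte-identical."""
    # First pass: bucket by file size, which only needs a stat() per file.
    by_size: dict[int, list[str]] = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    # Second pass: only hash files that share their size with another file.
    by_hash: dict[str, list[str]] = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue
        for path in paths:
            by_hash[file_sha256(path)].append(path)

    return [sorted(group) for group in by_hash.values() if len(group) > 1]
```

Calling `find_exact_duplicates("/mnt/user/old-library")` (a hypothetical path) returns one list per set of identical files. The size pre-filter avoids reading most of a multi-terabyte library. This only catches byte-identical copies: resized, re-encoded (messenger apps) or edited copies have different bytes and need image-similarity matching instead.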

These are the biggest problems I'm trying to solve:

1 - best import performance for my setup

2 - identify duplicates that lost their creation date and ended up looking older than the original files (big problem)

3 - identify duplicates with a later date but a higher resolution/size than the original file (which one is which?)

4 - identify and rename different photos that have exactly the same date (lost or wrong metadata)

5 - identify identical photos with different filenames and dates, and sometimes different sizes/resolutions

6 - avoid losing photo bursts to over-sensitive deduplication matches (if I have to make trade-offs, this is the least important)
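The date and resolution problems above come down to choosing one keeper from a group of files already known to be copies of the same photo. Here is a minimal, hypothetical sketch of one possible heuristic. It is not PhotoStructure's variant-sorting logic, and the `Copy` record, `MIN_PLAUSIBLE` cutoff and `choose_keeper` function are all assumptions. The rule keeps the copy with the most pixels. For the date, it uses the keeper's own capture date if plausible, else the earliest plausible date found on any other copy.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Copy:
    """One file believed to be a copy of the same photo (hypothetical record)."""
    path: str
    width: int
    height: int
    size_bytes: int
    captured_at: Optional[datetime]  # embedded capture date, None if missing


# Assumption: anything earlier is a bogus default (e.g. 1970 or 1980 epochs).
# Adjust if the library contains older scanned material.
MIN_PLAUSIBLE = datetime(1990, 1, 1)


def _plausible(d: Optional[datetime]) -> bool:
    return d is not None and d >= MIN_PLAUSIBLE


def choose_keeper(copies: list[Copy]) -> tuple[Copy, Optional[datetime]]:
    """Keep the copy with the most pixels (ties: larger file), plus a date."""
    keeper = max(copies, key=lambda c: (c.width * c.height, c.size_bytes))
    if _plausible(keeper.captured_at):
        return keeper, keeper.captured_at
    # Keeper lost its date: borrow the earliest plausible date from the others.
    dates = [c.captured_at for c in copies if _plausible(c.captured_at)]
    return keeper, min(dates, default=None)
```

No single rule resolves every case: a copy whose date was rewritten to an earlier but still plausible value will still mislead the fallback. This is exactly the trade-off being asked about.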

My setup is:

UNRAID (HIPERVISOR)

Using DOCKER for Photostructure

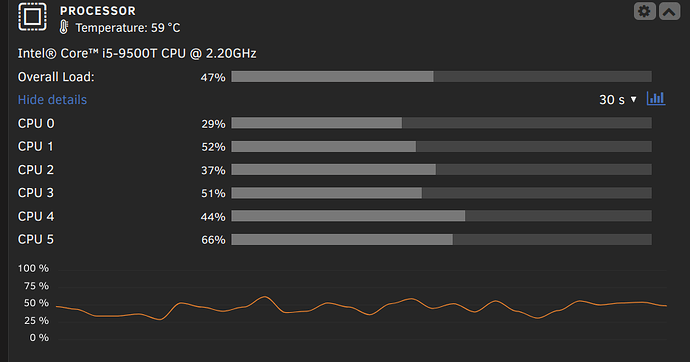

CPU Intel® Core™ i5-9500T 2.20GHz 6 cores 6 threads.

Memory RAM 32 GiB DDR4

Nvidia RTX 1060 3GB

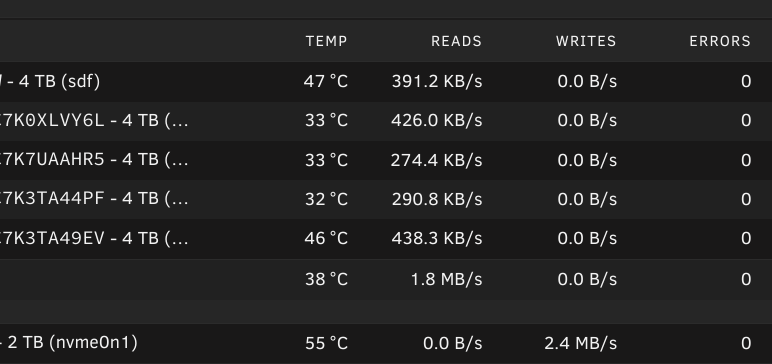

Cache Crucial P5 Plus 2TB PCIe NvME for Photostructure library import and appdata

ZFS stripe5 - 5x - Western Digital 4TB Purple 5400rpm SATA III 64MB for my old unorganized library

This system can be 90% dedicated to PhotoStructure because it's my priority.

I have good read/write bandwidth and reasonable CPU power for this application.

This is my latest library import config:

- 28 library setting overrides

Source: `/ps/library/.photostructure/settings.toml`

Settings:

- `PS_ENABLE_ARCHIVE=true`
- `PS_ENABLE_DELETE=true`
- `PS_ENABLE_EMPTY_TRASH=true`
- `PS_ENABLE_REMOVE=true`
- `PS_ENABLE_REMOVE_ASSETS=true`
- `PS_KEYWORD_BLOCKLIST=[]`
- `PS_MAX_ASSET_FILE_SIZE_BYTES=10000000000`
- `PS_MIN_ASSET_FILE_SIZE_BYTES=15000`
- `PS_MIN_IMAGE_DIMENSION=240`
- `PS_MIN_VIDEO_DIMENSION=120`
- `PS_MIN_VIDEO_DURATION_SEC=1`
- `PS_REJECT_RATINGS_LESS_THAN=0`
- `PS_FUZZY_YEAR_PARSING=true`
- `PS_USE_STAT_TO_INFER_DATES=false`
- `PS_VARIANT_SORT_CRITERIA_POWER=0.3`
- `PS_ALLOW_USER_AGENT=true`
- `PS_EMAIL=xxxxx@xxxxx.com`
- `PS_REPORT_ERRORS=true`
- `PS_MAX_ERRORS_PER_DAY=5`
- `PS_MATCH_SIDECARS_FUZZILY=true`
- `PS_WRITE_METADATA_TO_SIDECARS_IF_IMAGE=false`
- `PS_WRITE_METADATA_TO_SIDECARS_IF_SIDECAR_EXISTS=false`
- `PS_AUTO_REFRESH_LICENSE=true`
- `PS_PICK_PLAN_ON_WELCOME=true`
- `PS_ASSET_PATHNAME_FORMAT=y/MM/yMMdd_HHmmss.EXT`
- `PS_EXCLUDE_NO_MEDIA_ASSETS_ON_REBUILD=false`
- `PS_SYNC_REPORT_RETENTION_COUNT=30`
- `PS_AUTO_UPDATE_CHECK=true`
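The `PS_ASSET_PATHNAME_FORMAT=y/MM/yMMdd_HHmmss.EXT` setting has a one-second time resolution. Different photos with exactly the same date, or burst shots taken within one second, therefore map to the same target name. The sketch below approximates that pattern with Python's `strftime`, assuming `y`, `MM`, `dd`, `HH`, `mm`, `ss` mean year, month, day, hour, minute, second. It then disambiguates collisions with a `-1`, `-2` suffix. The suffix scheme and the helper names `asset_path`/`assign_paths` are hypothetical and not necessarily what PhotoStructure does.

```python
from collections import Counter
from datetime import datetime


def asset_path(captured_at: datetime, ext: str) -> str:
    """Approximate y/MM/yMMdd_HHmmss.EXT using strftime codes (assumed equivalent)."""
    return captured_at.strftime("%Y/%m/%Y%m%d_%H%M%S") + "." + ext


def assign_paths(items: list[tuple[datetime, str]]) -> list[str]:
    """Give every (capture time, extension) item a unique path,
    appending -1, -2, ... when several items share the same second."""
    seen = Counter()
    result = []
    for captured_at, ext in items:
        base = asset_path(captured_at, ext)
        n = seen[base]  # how many items already claimed this name
        seen[base] += 1
        if n == 0:
            result.append(base)
        else:
            stem, dot, suffix = base.rpartition(".")
            result.append(f"{stem}-{n}{dot}{suffix}")
    return result
```

For example, two photos stamped 2005-01-01 12:00:00 would become `2005/01/20050101_120000.jpg` and `2005/01/20050101_120000-1.jpg`. A name collision alone therefore cannot distinguish a true duplicate from a different photo with a copied or default timestamp.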

- No system setting overrides
- Some volumes are missing UUIDs
- Storage volumes are OK
- PhotoStructure is not running as root
- PhotoStructure is up to date
- CPU utilization is 3%
- Operating system is OK

Tools:

- SQLite is OK
- ExifTool is OK
- jpegtran is OK
- Sharp is OK
- Node.js is OK
- HEIF images will be imported
- Videos will be imported

Summary:

Are some of my settings wrong for my use case?

Which settings are recommended?

Which settings are considered best practice?

Which settings give the best performance without import errors or server crashes?

Thanks to the creator, a great professional and a great person, and thanks to the community!