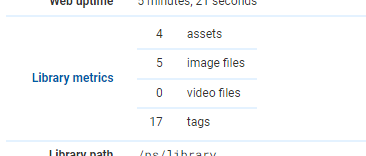

Expected Behavior

These five images should be combined into one or two assets (the info command output mostly agrees), but they’re not actually displayed that way in the UI.

- /20200427_225538.jpg = original portrait

- /20200427_225616.jpg = original landscape

- /107918631_157209.jpg = compressed portrait

- /806525515_15743.jpg = compressed landscape

- /review/806525515_15743.jpg = review copy of compressed landscape from parent directory, only change being the additional tag “review20221105”

Current Behavior

I actually see four assets in the UI; only the “compressed portrait” and “compressed landscape” images were combined.

Per “What do you mean by "deduplicate"? | PhotoStructure”, I reviewed the info command output and found that PhotoStructure does indeed think the two compressed images should be combined, but it also thinks several other pairs of files should be combined that the UI shows as separate assets. It does not think the two original images should be combined.

Expected output from info queries:

Set alias

xxxxx@xxxxx:/$ alias phstr='sudo docker exec -u node -it photostructure-server-alpha-20221106 ./photostructure'
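
The comparisons in this post are a hand-picked subset of the ten possible pairs among the five files. To check every pair, the command lines can be generated rather than typed. This is only a sketch: it assumes the phstr alias above is set in the shell where the printed lines are pasted, and that the files sit under /pictures as in this report. The function name pairwise_commands is made up for illustration.

```python
from itertools import combinations

# Paths as they appear inside the container in this report.
FILES = [
    "/pictures/20200427_225538.jpg",         # original portrait
    "/pictures/20200427_225616.jpg",         # original landscape
    "/pictures/107918631_157209.jpg",        # compressed portrait
    "/pictures/806525515_15743.jpg",         # compressed landscape
    "/pictures/review/806525515_15743.jpg",  # review copy
]


def pairwise_commands(files):
    """Return one 'phstr info A B' command line per unordered pair of files."""
    return [f"phstr info {a} {b}" for a, b in combinations(files, 2)]


if __name__ == "__main__":
    # Print the commands so they can be pasted into the shell with the alias.
    for cmd in pairwise_commands(FILES):
        print(cmd)
```

The script only prints commands; it does not run PhotoStructure itself.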

Don't combine original portrait & original landscape

xxxxx@xxxxx:/$ phstr info /pictures/20200427_225538.jpg /pictures/20200427_225616.jpg

{

fileComparison: 'These files represent different assets: captured-at 2020042722553840±10ms != 2020042722561648±10ms',

primary: undefined,

imageHashComparison: {

imageCorr: 0.74,

aRotation: 90,

colorCorr: 0.97,

meanCorr: 0.82,

greyscale: false

},

...
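
As a rough sanity check on that message, the two captured-at values can be compared directly. The sketch below assumes the value is YYYYMMDDhhmmss followed by two digits of centiseconds; that format is my guess from the filenames, not something the info output documents. Even if the last two digits mean something else, the whole-second part already puts the two shots about 38 seconds apart, far outside the ±10ms shown.

```python
from datetime import datetime, timedelta


def parse_captured_at(value: str) -> datetime:
    """Parse e.g. '2020042722553840' (ASSUMED: YYYYMMDDhhmmss + centiseconds)."""
    base = datetime.strptime(value[:14], "%Y%m%d%H%M%S")
    centis = int(value[14:] or 0)  # trailing digits, treated as 1/100 s
    return base + timedelta(milliseconds=10 * centis)


def gap_seconds(a: str, b: str) -> float:
    """Absolute time difference between two captured-at values, in seconds."""
    return abs((parse_captured_at(b) - parse_captured_at(a)).total_seconds())


if __name__ == "__main__":
    # Values copied from the fileComparison message above, without the ±10ms.
    print(gap_seconds("2020042722553840", "2020042722561648"))
```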

Do combine original portrait & compressed portrait

xxxxx@xxxxx:/$ phstr info /pictures/20200427_225538.jpg /pictures/107918631_157209.jpg

{

fileComparison: 'These two files will be aggregated into a single asset.',

primary: '/pictures/20200427_225538.jpg',

imageHashComparison: {

imageCorr: 0.91,

aRotation: 90,

colorCorr: 1,

meanCorr: 0.94,

greyscale: false

},

...

Do combine original landscape & compressed landscape

xxxxx@xxxxx:/$ phstr info /pictures/20200427_225616.jpg /pictures/806525515_15743.jpg

{

fileComparison: 'These two files will be aggregated into a single asset.',

primary: '/pictures/20200427_225616.jpg',

imageHashComparison: {

imageCorr: 0.93,

aRotation: 0,

colorCorr: 1,

meanCorr: 0.95,

greyscale: false

},

...

Do combine compressed landscape & review copy

xxxxx@xxxxx:/$ phstr info /pictures/review/806525515_15743.jpg /pictures/806525515_15743.jpg

{

fileComparison: 'These two files will be aggregated into a single asset.',

primary: '/pictures/review/806525515_15743.jpg',

imageHashComparison: {

imageCorr: 1,

aRotation: 0,

colorCorr: 1,

meanCorr: 1,

greyscale: false

},

...

Unexpected but acceptable output from info queries:

Do combine compressed landscape & compressed portrait

xxxxx@xxxxx:/$ phstr info /pictures/107918631_157209.jpg /pictures/806525515_15743.jpg

{

fileComparison: 'These two files will be aggregated into a single asset.',

primary: '/pictures/806525515_15743.jpg',

imageHashComparison: {

imageCorr: 0.7,

aRotation: 0,

colorCorr: 0.97,

meanCorr: 0.79,

greyscale: false

},

...
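
Taken together, the pairwise verdicts are not transitive: the two originals are “different assets”, yet each is combined with its compressed copy, and the two compressed copies are combined with each other. The sketch below shows what a simple transitive merge (union-find) would do with exactly these five verdicts. It is an illustration only, not PhotoStructure’s actual grouping logic, and the short labels and the function name group are made up here.

```python
# Pairwise verdicts copied from the info output in this post.
# True = "will be aggregated into a single asset", False = "different assets".
VERDICTS = {
    ("orig_portrait", "orig_landscape"): False,
    ("orig_portrait", "comp_portrait"): True,
    ("orig_landscape", "comp_landscape"): True,
    ("review_copy", "comp_landscape"): True,
    ("comp_portrait", "comp_landscape"): True,
}


def group(verdicts):
    """Merge files transitively: if A~B and B~C, then A, B, C share a group."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), same in verdicts.items():
        ra, rb = find(a), find(b)  # registers both files even if not merged
        if same and ra != rb:
            parent[ra] = rb

    groups = {}
    for name in parent:
        groups.setdefault(find(name), set()).add(name)
    return list(groups.values())


if __name__ == "__main__":
    for members in group(VERDICTS):
        print(sorted(members))
```

With these verdicts, a transitive merge puts all five files into a single group, even though the two originals compare as different. That is why I’d expect one asset (or two, if the originals’ “different” verdict takes priority) rather than the four I see.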

Steps to Reproduce

See the five images in the zip attachment sent to support@photostructure.com. I don’t believe the metadata (including GPS data) has anything I want to keep private, but the zip is over 4MB, so the forum won’t let me attach it.

- Extract the five images

- Run PhotoStructure and let it build the library

- (probably not related, but I clicked “Shutdown” and changed environment variables a few times to try to get it to process faster, then after starting PhotoStructure again I clicked “Resync” to get it to continue with the import process)

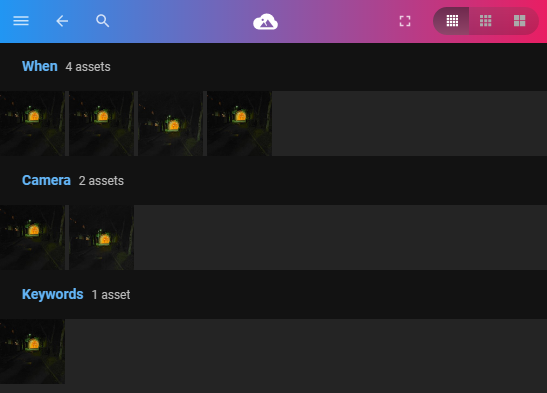

- Look for the images and see they aren’t combined. I checked both by searching via When, and also via Keyword “review20221105”.

Environment

Synology DS920+, DSM 7.1.1-42962 Update 1

PhotoStructure 2.1.0-alpha.7 on Docker