I can do that, but here is what I’ve done so far. It’s running now (and much, much faster, too). Can you tell me whether I’ve done something that breaks it in a new and interesting way?

- Moved the previews to the SSD, per this post: Troubleshooting slow performance

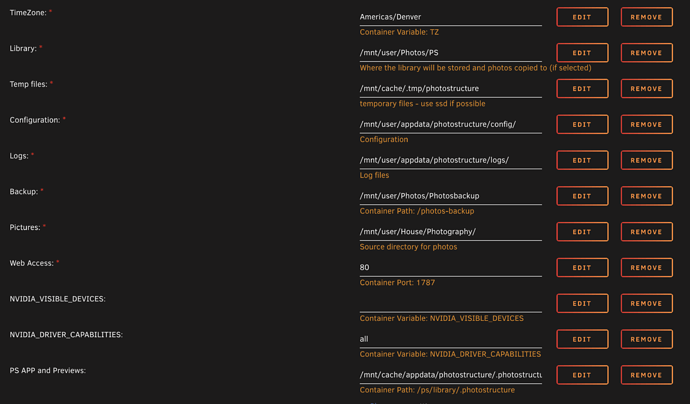

- I set up my Docker container like this:

- I moved the entire app into that /mnt/cache/appdata/photostructure/.photostructure folder (everything from /ps/library/.photostructure, which was in /mnt/user/Photos/PS).

It doesn’t seem to be scanning, though, even when I tell it to. It wrote only a few log entries, just the following:

```
{"ts":1629231478694,"l":"error","ctx":"DbRetries","msg":"Caught db error. Retrying in 1231ms.","meta":"SqliteError: code SQLITE_BUSY: database is locked\nSqliteError: database is locked\n at /ps/app/bin/sync.js:9:706357\n at Function.<anonymous> (/ps/app/bin/sync.js:9:727603)\n at Function.sqliteTransaction (/ps/app/node_modules/better-sqlite3/lib/methods/transaction.js:65:24)\n at /ps/app/bin/sync.js:9:727613\n at Object.t.handleDbRetries (/ps/app/bin/sync.js:9:708372)"}
```
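For context on the `SQLITE_BUSY: database is locked` error above: SQLite allows only one writer at a time, so a second connection that wants to write has to wait for the lock and gives up once its busy timeout expires. Below is a minimal sketch using Python’s standard-library `sqlite3` module (not PhotoStructure’s code, which runs on Node with better-sqlite3) that reproduces the error and then retries with exponential backoff, roughly what the `DbRetries` "Retrying in 1231ms" line suggests is happening. The function names, the 0.1 s timeout and the backoff values are illustrative assumptions.

```python
import os
import sqlite3
import tempfile
import time


def busy_error_message(db_path: str) -> str:
    """Reproduce SQLITE_BUSY: one connection holds the write lock, a second times out."""
    # isolation_level=None means we issue BEGIN/ROLLBACK ourselves.
    holder = sqlite3.connect(db_path, timeout=0.1, isolation_level=None)
    waiter = sqlite3.connect(db_path, timeout=0.1, isolation_level=None)
    try:
        holder.execute("CREATE TABLE IF NOT EXISTS t (x INTEGER)")
        holder.execute("BEGIN IMMEDIATE")  # take the write lock and keep holding it
        holder.execute("INSERT INTO t VALUES (1)")
        try:
            waiter.execute("BEGIN IMMEDIATE")  # waits up to 0.1 s for the lock
            waiter.execute("ROLLBACK")
            return "no error"
        except sqlite3.OperationalError as e:
            return str(e)  # SQLite's message for SQLITE_BUSY
    finally:
        holder.execute("ROLLBACK")
        holder.close()
        waiter.close()


def retry_if_locked(fn, attempts: int = 5, base_delay_s: float = 0.05):
    """Call fn(), retrying with exponential backoff while SQLite reports a lock."""
    for attempt in range(attempts):
        try:
            return fn()
        except sqlite3.OperationalError as e:
            if "locked" not in str(e) or attempt == attempts - 1:
                raise  # not a lock error, or out of retries
            time.sleep(base_delay_s * 2 ** attempt)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(busy_error_message(os.path.join(d, "demo.db")))
```

The point of the sketch is that retries only help if whoever holds the lock eventually lets go; if the database sits on drives saturated by scanning, every writer holds the lock longer and the retries pile up.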

```
{"ts":1629231664586,"l":"error","ctx":"EventStore","msg":"maybeSendEvent(): ACCEPT","meta":{"event":{"timestamp":1629231478.963,"exception":{"values":[{"stacktrace":{"frames":[{"colno":843842,"filename":"async /ps/app/bin/sync.js","function":"null.<anonymous>","lineno":9,"in_app":false,"module":"sync"},{"colno":727502,"filename":"/ps/app/bin/sync.js","function":"async Object.t.tx","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} (\"Timeout while waiting for external vacuum to complete\")),await o.retryOnReject((async()=>e.inTransaction?t(e.db):h.handleDbRetries((()=>e. {snip}","post_context":["//# sourceMappingURL=sync.js.map"]},{"colno":608825,"filename":"/ps/app/bin/sync.js","function":"async c","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} orElse(e,1))}));let u=0;const c=async()=>{var e;try{return await i()}catch(i){if(!1===await(null===(e=t.errorIsRetriable)||void 0===e?void 0 {snip}","post_context":["//# sourceMappingURL=sync.js.map"]},{"colno":7,"filename":"node:internal/timers","function":"processTimers","lineno":500,"in_app":false,"module":"timers"},{"colno":9,"filename":"node:internal/timers","function":"listOnTimeout","lineno":526,"in_app":false,"module":"timers"},{"colno":5,"filename":"node:internal/process/task_queues","function":"runNextTicks","lineno":61,"in_app":false,"module":"task_queues"},{"colno":608485,"filename":"/ps/app/bin/sync.js","function":"null.<anonymous>","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} :void 0)),n.unrefDelay(t).then((()=>{if(null==i)throw i=!1,new Error(\"timeout\")}))])}t.thenOrTimeout=o,t.retryOnReject=function(e,t){const i {snip}","post_context":["//# sourceMappingURL=sync.js.map"]}]},"type":"Error","value":"timeout: unhandledRejection","mechanism":{"handled":true,"type":"generic"}}]},"event_id":"2dadd9c28e904d94a19802736180f818","platform":"node","environment":"production","release":"1.0.0+20210812145208","sdk":{"integrations":["InboundFilters","FunctionToString","Console","Http","OnUncaughtException","OnUnhandledRejection","LinkedErrors"]},"message":"timeout: unhandledRejection: Error: timeout: unhandledRejection","user":{"email":"whoopn@gmail.com"},"extra":{"pid":203,"serviceName":"sync","serviceEnding":false,"runtimeMs":217266,"version":"1.0.0","os":"Alpine Linux v3.13 on x64","isDocker":true,"nodeVersion":"16.6.2","locale":"en","cpus":"24 × Intel(R) Xeon(R) CPU E5-2620 0 @ 2.00GHz","memoryUsageMb":32,"memoryUsageRssMb":140,"systemMemory":"1.33 GB / 33.8 GB","ffmpeg":"version 4.3.1","vlc":"(not found)","argv":"[\"/usr/local/bin/node\",\"/ps/app/bin/sync.js\"]"}},"pleaseSend":false,"recentEventCount":0,"maxErrorsPerDay":3}}
```

```
{"ts":1629231664629,"l":"error","ctx":"EventStore","msg":"maybeSendEvent(): ACCEPT","meta":{"event":{"timestamp":1629231478.98,"exception":{"values":[{"stacktrace":{"frames":[{"colno":843842,"filename":"async /ps/app/bin/sync.js","function":"null.<anonymous>","lineno":9,"in_app":false,"module":"sync"},{"colno":727502,"filename":"/ps/app/bin/sync.js","function":"async Object.t.tx","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} (\"Timeout while waiting for external vacuum to complete\")),await o.retryOnReject((async()=>e.inTransaction?t(e.db):h.handleDbRetries((()=>e. {snip}","post_context":["//# sourceMappingURL=sync.js.map"]},{"colno":608825,"filename":"/ps/app/bin/sync.js","function":"async c","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} orElse(e,1))}));let u=0;const c=async()=>{var e;try{return await i()}catch(i){if(!1===await(null===(e=t.errorIsRetriable)||void 0===e?void 0 {snip}","post_context":["//# sourceMappingURL=sync.js.map"]},{"colno":7,"filename":"node:internal/timers","function":"processTimers","lineno":500,"in_app":false,"module":"timers"},{"colno":9,"filename":"node:internal/timers","function":"listOnTimeout","lineno":526,"in_app":false,"module":"timers"},{"colno":5,"filename":"node:internal/process/task_queues","function":"runNextTicks","lineno":61,"in_app":false,"module":"task_queues"},{"colno":608485,"filename":"/ps/app/bin/sync.js","function":"null.<anonymous>","lineno":9,"in_app":true,"module":"sync","pre_context":["","/*"," * Copyright © 2021, PhotoStructure Inc."," * By using this software, you accept all of the terms in <https://photostructure.com/eula>."," * IF YOU DO NOT ACCEPT THESE TERMS, DO NOT USE THIS SOFTWARE"," */",""],"context_line":"'{snip} :void 0)),n.unrefDelay(t).then((()=>{if(null==i)throw i=!1,new Error(\"timeout\")}))])}t.thenOrTimeout=o,t.retryOnReject=function(e,t){const i {snip}","post_context":["//# sourceMappingURL=sync.js.map"]}]},"type":"Error","value":"timeout: unhandledRejection","mechanism":{"handled":false,"type":"onunhandledrejection"}}]},"event_id":"da41be4101b246628b8e329e517bf191","platform":"node","environment":"production","release":"1.0.0+20210812145208","sdk":{"integrations":["InboundFilters","FunctionToString","Console","Http","OnUncaughtException","OnUnhandledRejection","LinkedErrors"]},"extra":{"unhandledPromiseRejection":true,"pid":203,"serviceName":"sync","serviceEnding":false,"runtimeMs":217280,"version":"1.0.0","os":"Alpine Linux v3.13 on x64","isDocker":true,"nodeVersion":"16.6.2","locale":"en","cpus":"24 × Intel(R) Xeon(R) CPU E5-2620 0 @ 2.00GHz","memoryUsageMb":32,"memoryUsageRssMb":140,"systemMemory":"1.33 GB / 33.8 GB","ffmpeg":"version 4.3.1","vlc":"(not found)","argv":"[\"/usr/local/bin/node\",\"/ps/app/bin/sync.js\"]"},"message":"timeout: unhandledRejection: [object Promise]","user":{"email":"whoopn@gmail.com"}},"pleaseSend":false,"recentEventCount":0,"maxErrorsPerDay":3}}
```
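Reading the minified stack, the two `timeout: unhandledRejection` events appear to come from a helper (`thenOrTimeout`) that races a database transaction against a timer and throws `Error("timeout")` if the timer fires first; one of the snipped lines also mentions waiting for an external vacuum. That interpretation is a guess from minified code. Below is a rough Python analogue using `asyncio.wait_for`, in which a transaction stuck behind a lock (simulated here with a sleep) loses the race. The names `then_or_timeout` and `slow_transaction` and all the delays are hypothetical.

```python
import asyncio


async def then_or_timeout(aw, timeout_s: float):
    """Race an awaitable against a timer; raises a timeout error if the timer wins."""
    return await asyncio.wait_for(aw, timeout=timeout_s)


async def slow_transaction(delay_s: float) -> str:
    # Hypothetical stand-in for a DB transaction waiting on a locked database.
    await asyncio.sleep(delay_s)
    return "committed"


async def main() -> list:
    results = []
    for delay in (0.01, 1.0):  # a fast transaction, then one stuck behind a lock
        try:
            results.append(await then_or_timeout(slow_transaction(delay), 0.1))
        except asyncio.TimeoutError:
            results.append("timeout")
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))  # ['committed', 'timeout']
```

In the sketch the timeout is caught; in the logs, the second event reports `"handled":false`, meaning nothing caught the rejection in that case.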

I don’t have enough SSD space for my sorted library to live on SSD, but I can fit the previews and the database there, and that is my goal. My theory is that the database is starved for I/O because it sits on the same drives that are being hit so hard by scanning and ingest. I’m just not sure whether the links that inevitably get created inside the Docker container are supported, since the /ps/library/.photostructure folder now isn’t TECHNICALLY right under the /ps/library folder. Linux usually has no issues with this, but I’ve seen Docker have issues.
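One way to check from inside the container that the nested mount actually took effect is to compare the device IDs of `/ps/library` and `/ps/library/.photostructure`. Assuming the two host folders (`/mnt/user/Photos/PS` and `/mnt/cache/appdata/photostructure/.photostructure`) are on different filesystems, `os.stat(...).st_dev` should differ between them. This is a minimal Python sketch, not a PhotoStructure feature; it assumes a Python interpreter is available in the container, and `on_separate_mount` is a hypothetical helper.

```python
import os


def on_separate_mount(parent: str, child: str) -> bool:
    """True if `child` lives on a different device/filesystem than `parent`."""
    # st_dev identifies the device containing the path; a bind mount from a
    # different disk (e.g. an SSD cache) reports a different st_dev.
    return os.stat(parent).st_dev != os.stat(child).st_dev


if __name__ == "__main__":
    library = "/ps/library"
    ps_dir = os.path.join(library, ".photostructure")
    if os.path.isdir(ps_dir):
        print("is a mount point:", os.path.ismount(ps_dir))
        print("on a separate device:", on_separate_mount(library, ps_dir))
    else:
        print(ps_dir, "not found (run this inside the container)")
```

If both checks print `False`, the `.photostructure` folder is most likely still on the same drives as the library rather than on the SSD.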

Should I be doing this another way?

This way perhaps? Hybrid Library