PhotoStructure is an “indie” project: built by an independent, solo developer (me!) and paid for by subscription revenue from you (thank you, subscribers!).

A couple of people have asked how PhotoStructure is developed, so I figured I’d write it down.

Hardware

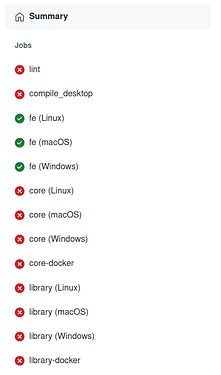

PhotoStructure ships a ton of different builds so it can run across platforms:

- PhotoStructure for Desktops on macOS, Windows 10+, and Linux

- PhotoStructure for Docker (arm64 and x64)

- PhotoStructure for Node (macOS, Windows, Linux)

This means testing is a bit of a bear, and requires a bunch of hardware. I have:

- My primary Linux/Ubuntu workstation (an AMD Ryzen 9 3900X, highly recommended!)

- A 2018 Intel Mac mini

- A 2020 M1 Mac mini

- An old gaming rig from pre-2010 for Windows 10 testing

- An old laptop for Windows 10 testing

- A PiBox (get a 5% discount via this link!)

- A Raspberry Pi 4

- An old ARM-based DS416j Synology NAS

- A more recent Intel-based DS918+ Synology NAS

- An Intel Core i3-based NUC (which hosts my family’s PhotoStructure library; it’s a bit underpowered, which helps highlight potential performance bottlenecks)

- An Intel Core i3 UnRAID box (for UnRAID testing)

- An Intel Core i3 FreeNAS box (that I need to upgrade to TrueNAS at some point soon)

- Several more virtual machines running on my workstation to simulate Windows 10 on different hardware classes

Software

Given how many different environments PhotoStructure has to support, I rely on automated tests to catch defects before users see them, so PhotoStructure’s “test coverage” is pretty thorough:

- 1,000+ front-end unit tests

- 8,000+ library unit tests

- 500+ library “system” and “integration” tests

This is in addition to all the tests I wrote for the open-source components of PhotoStructure, batch-cluster and exiftool-vendored.

While I develop, I keep mocha running in --watch mode on another monitor, and it tells me which tests I’ve just broken and which tests are no longer correct.

When users find issues, I try to write a failing test that reproduces their error, and then fix the implementation so the test passes.

For every commit that adds a new code path, I try to add at least a couple of new tests to verify its behavior.
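Here is a minimal TypeScript sketch of that “reproduce it with a failing test, then fix it” loop. Everything in it is hypothetical and made up for illustration: the `isSupportedImage` function, its extension list, and the uppercase-`.JPG` bug report. Plain `node:assert` calls stand in for mocha’s `describe`/`it` so the snippet runs on its own.

```typescript
import { strict as assert } from "node:assert";
import { extname } from "node:path";

// Hypothetical: the file extensions this sketch treats as images.
const ImageExtensions = new Set([".jpg", ".jpeg", ".png", ".heic"]);

// Hypothetical function under test. Imagine the buggy version looked up
// extname(filename) as-is, so "IMG_0001.JPG" (".JPG") was rejected.
// The fix lowercases the extension before the lookup.
function isSupportedImage(filename: string): boolean {
  return ImageExtensions.has(extname(filename).toLowerCase());
}

// Step 1: a regression test reproducing the (hypothetical) user report.
// It would fail against the buggy version and passes after the fix.
assert.equal(isSupportedImage("IMG_0001.JPG"), true);

// Step 2: a couple of extra tests pinning down the surrounding behavior.
assert.equal(isSupportedImage("holiday.jpeg"), true);
assert.equal(isSupportedImage("notes.txt"), false);
assert.equal(isSupportedImage("README"), false); // no extension at all
```

Writing the failing test first proves the test actually exercises the bug; once it passes, it stays in the suite so the same regression can’t sneak back in.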

I’ve set up “self-hosted” GitHub Actions runners on all the above hardware marked with a ![](). After every git commit, all that hardware runs all the above tests.

GitHub Actions reports which parts of the Continuous Integration (CI) matrix passed or failed, and exactly how any failing part failed.

Here’s a recent, not-so-successful run:

I only release stable builds when the entire CI matrix passes.
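That release rule boils down to a simple gate. The following is a hypothetical TypeScript sketch, not PhotoStructure’s actual release tooling: `MatrixResult`, `failures`, `canReleaseStable`, and the runner labels are all invented for illustration.

```typescript
// Hypothetical shape of one cell in the CI matrix:
// which runner ran which test suite, and whether it passed.
interface MatrixResult {
  runner: string; // hypothetical label, e.g. "raspberry-pi-4"
  suite: string; // hypothetical label, e.g. "library-unit"
  passed: boolean;
}

// Returns every cell that failed; an empty array means nothing failed.
function failures(results: readonly MatrixResult[]): MatrixResult[] {
  return results.filter((r) => !r.passed);
}

// A stable build is allowed only when the matrix actually ran
// (at least one cell) and every single cell passed.
function canReleaseStable(results: readonly MatrixResult[]): boolean {
  return results.length > 0 && failures(results).length === 0;
}

// Usage: one failing cell anywhere blocks the release.
const run: MatrixResult[] = [
  { runner: "linux-workstation", suite: "library-unit", passed: true },
  { runner: "raspberry-pi-4", suite: "library-system", passed: false },
];
console.log(canReleaseStable(run)); // false
```

Treating an empty matrix as “not releasable” is a deliberate choice in this sketch: if every runner were offline, nothing would have been tested, so nothing should ship.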