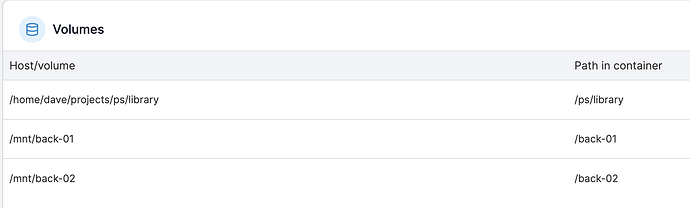

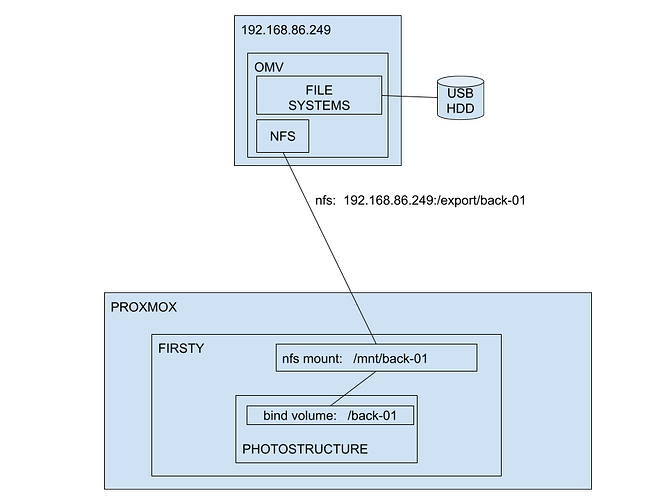

environment:

docker, using portainer

bind mount (host → container):

/mnt/back-01 → /back-01

on host, /mnt/back-01 is an nfs mount to: 192.168.86.246/export/back-01

so, uri from info looks good:

uri: 'psnet://192.168.86.246/export/back-01/BACKUP/PICTURES/20140420/IMG_2405.JPG'

but the nativePath in the worker log, when the sync command is issued manually, is:

"nativePath":"/back-01/back-01/BACKUP/PICTURES/20140420/IMG_2405.JPG"},"error":"p: build(): missing stat info for best file³ at (missing stack)"}}

it appears build() has not computed the nativePath correctly: it jammed in an additional back-01 folder, like the folder name from the host mount or something. given the bind mount above, i would expect /back-01/BACKUP/PICTURES/20140420/IMG_2405.JPG.
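to make the expected mapping concrete, here is a minimal python sketch of how i would expect the uri to translate to a path inside the container. this is not the app's actual logic, just my assumption about the mapping: `EXPORT_ROOT` and `CONTAINER_MOUNT` are the values from my setup above, and both helper names are made up for illustration.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Assumed values from the setup described in this post:
# the NFS export path on the server and the mountpoint inside the container.
EXPORT_ROOT = PurePosixPath("/export/back-01")
CONTAINER_MOUNT = PurePosixPath("/back-01")


def expected_native_path(uri: str) -> str:
    """Hypothetical helper: map a psnet:// uri to the path i expect in the container."""
    export_path = PurePosixPath(urlparse(uri).path)
    # Raises ValueError if the uri does not live under the export root.
    relative = export_path.relative_to(EXPORT_ROOT)
    return str(CONTAINER_MOUNT / relative)


def has_repeated_leading_segment(path: str) -> bool:
    """Hypothetical check: True if the first two folders of an absolute path are identical."""
    parts = PurePosixPath(path).parts  # e.g. ('/', 'back-01', 'back-01', ...)
    return len(parts) > 2 and parts[1] == parts[2]


uri = "psnet://192.168.86.246/export/back-01/BACKUP/PICTURES/20140420/IMG_2405.JPG"
logged = "/back-01/back-01/BACKUP/PICTURES/20140420/IMG_2405.JPG"

print(expected_native_path(uri))
print(has_repeated_leading_segment(logged))
```

the second helper is only a quick way to flag the doubled folder in a logged path; it would also flag a legitimate layout where two nested folders happen to share a name.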

i did the manual steps above after realizing that multiple hours of syncs produced 0 images in the database.

reviewing the log files showed this happening on every image.

weird that sync gets the uri right but not the nativePath, while info gets both right.

any thoughts? i can experiment with different mountpoints, trailing slashes, etc. but i do think there is a bug when info acts differently than sync, w.r.t. basic file info.