Feel free to improve on this, as I’ve little experience deploying Docker containers via the CLI, or really via anything other than the CA plugin in Unraid.

The challenge: I wanted a ‘python3’ environment from which to run ratarmount, so that I could mount google-takeout archives for photostructure import.

Related reading:

As noted in that writeup, make sure you grab the tgz from Google Takeout. The archive sizes can be larger, and ratarmount consumes them well (zip may not be supported yet).

I have a separate docker container to present the mounted contents of a series of google takeout tars. The trick is to specify the bind-mount such that the baseOS (or the Photostructure container) can see the files.

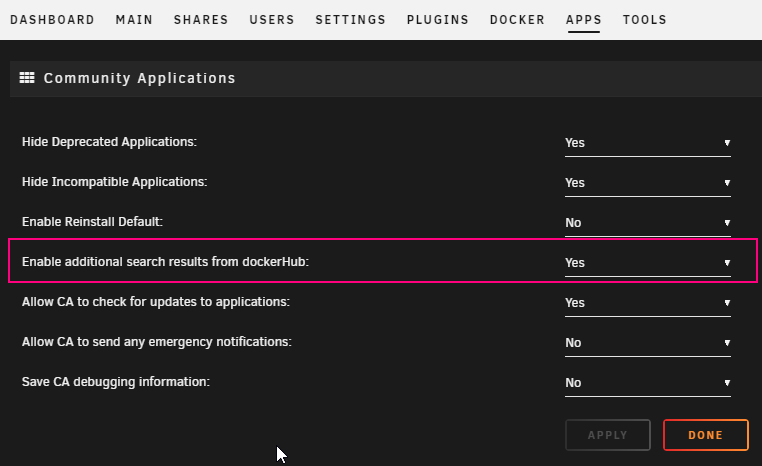

Ah, first enable dockerHub in Unraid (Apps tab -> CA Settings along the left nav).

Here’s the docker line that got me there (using the standard dockerHub ‘python’) and executed from an unraid console:

docker run -d \
-it \
--name <containerName> \
--mount type=bind,source=<src-host>,target=<dst-container>,bind-propagation=rshared \
--cap-add SYS_ADMIN --device /dev/fuse \
python

Then exec in with bash:

docker exec -it <containerName-or-ID> bash

Install fuse tools

apt-get update && apt-get install -y python3-fusepy fuse

Install ratarmount

pip install ratarmount

Make your mountpoint and mount the archives onto it, mine only as an example:

root@ae94450dd366:/duplicates# ratarmount *.tgz 20210908_takeout/takeoutmountpoint

Loading offset dictionary from /duplicates/takeout-20210908T180750Z-001.tgz.index.sqlite took 0.00s

Loading offset dictionary from /duplicates/takeout-20210908T180750Z-002.tgz.index.sqlite took 0.00s

Loading offset dictionary from /duplicates/takeout-20210908T180750Z-003.tgz.index.sqlite took 0.00s

Remember that whatever your ratarmount mount point, it’ll be read-only. So you may want to host-map its parent directory; that way you can create the .uuid file for PhotoStructure tracking.

Also note that it’ll take some time to initially create the sqlite index file for each of your tars. For 110 GB of takeout tars, this was about an hour for me. But the cool thing is that each index only needs to be created once (which is why loading took only 0.00s in the example above).
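To see which archives still need that one-time index build, you can look for the matching `.index.sqlite` file beside each one, going by the file names in the output above. This is only a sketch: the function name `missing_indexes` is made up for illustration, and it assumes ratarmount was able to write the index next to the archive rather than somewhere else.

```shell
#!/bin/sh
# Hypothetical helper: print every .tgz in a directory that has no
# <archive>.index.sqlite file sitting next to it yet.
missing_indexes() {
    dir="${1:-.}"
    for archive in "$dir"/*.tgz; do
        # Skip the unexpanded glob when the directory has no .tgz files.
        [ -e "$archive" ] || continue
        if [ ! -e "$archive.index.sqlite" ]; then
            printf '%s\n' "$archive"
        fi
    done
}
```

Run inside the container as, for example, `missing_indexes /duplicates`. No output would mean every archive already has its index.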

Verify that you can see/copy the contents of your ratarmount point from the unraid console. If that looks good, you can have some confidence in the path-mapping to your photostructure container, same way you mapped to your regular photos.
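That check can be scripted from the Unraid console. A minimal sketch, assuming all you want to know is whether the host-side path shows any entries at all; the function name `mount_has_content` is hypothetical, and the path you pass is whatever your own bind-mount source plus mountpoint works out to.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the directory exists and has at
# least one entry, which is what a live ratarmount mount should look like
# when viewed from the host.
mount_has_content() {
    dir="$1"
    [ -d "$dir" ] || return 1
    # ls -A lists everything except . and .. so empty output means empty dir.
    [ -n "$(ls -A "$dir" 2>/dev/null)" ]
}
```

For example: `mount_has_content "$HOST_DIR/20210908_takeout/takeoutmountpoint" && echo "visible from host"`, where `HOST_DIR` stands in for your `<src-host>` value. An empty directory here usually means the mount is not propagating out of the container.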

Where the above approach falls short:

The above mount will only stay alive while your bash session/console to the python container is up, and it will certainly be lost if the container is ever stopped. I need to understand the ‘Dockerfile’ stuff better, or something like it, to make this persist. Ultimately, I want PhotoStructure to import the json data (face tagging) from Google Takeout, as almost all of the photos themselves are already in my normal library. So if the mountpoint stays up long enough for that to happen, then it works for me.
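One untested idea for keeping the mount up without a Dockerfile: make ratarmount the container's main process instead of something typed into a bash session. The sketch below only prints a candidate `docker run` line so it can be reviewed before use. `CONTAINER`, `HOST_DIR` and `MOUNT_DIR` are hypothetical values, `/duplicates` as the target is borrowed from my example session, and I am assuming ratarmount's `-f` option keeps it in the foreground (check `ratarmount --help`). The packages get reinstalled on every container start, so expect a slow startup.

```shell
#!/bin/sh
# Sketch only: print (do not run) a single docker command that installs
# ratarmount and mounts the archives as the container's main process.
# CONTAINER, HOST_DIR and MOUNT_DIR are hypothetical; substitute your own.
CONTAINER="${CONTAINER:-takeout-mount}"
HOST_DIR="${HOST_DIR:-/mnt/user/duplicates}"
MOUNT_DIR="${MOUNT_DIR:-20210908_takeout/takeoutmountpoint}"

print_run_command() {
    # Unquoted heredoc: variables expand, \\ becomes a literal backslash,
    # and *.tgz is left alone for the container's shell to expand.
    cat <<EOF
docker run -d \\
  --name $CONTAINER \\
  --restart unless-stopped \\
  --mount type=bind,source=$HOST_DIR,target=/duplicates,bind-propagation=rshared \\
  --cap-add SYS_ADMIN --device /dev/fuse \\
  --workdir /duplicates \\
  python \\
  sh -c 'apt-get update && apt-get install -y python3-fusepy fuse && pip install ratarmount && mkdir -p $MOUNT_DIR && exec ratarmount -f *.tgz $MOUNT_DIR'
EOF
}

print_run_command
```

Because the container exits when ratarmount does, `--restart unless-stopped` would bring the mount back after a crash or reboot. Whether a stale mountpoint on the host gets in the way after a restart is something I have not verified.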